In our many advisories over the years with companies going through PCI, some of the challenges we face include this little thing called compensating controls.

It’s a PCI term in many sense. Same as ‘segmentation penetration testing’. It’s when you can’t address the actual control in PCI-DSS for a reason and you need to put other controls to address the ‘spirit’ of the control. The spirit means: why did PCI-DSS put that control in, in the first place?

Immediately, this becomes an outlet of some of the greatest creativity ever existed in our technology field. If everyone involved can channel such creativity of controls into developing innovative tech, we would be far ahead achieving the technology vision 2020 that our country is lagging behind in. As they say: Necessity becomes the mother of invention. So everyone starts inventing controls.

Unfortunately such invention of controls in thin air will likely face a bounced rejection from the QSA which would further cause stress due to the impending deadline and the admission that the compliance will be delayed. So it’s in everyone’s interest that compensating controls are done correctly from the beginning.

Now this article’s purpose is not to go through the whole list of what compensating controls are. There are already thousands of articles that do that. Suffice to say, a compensating control is when you cannot meet the PCI requirements and you still want to be compliant.

So to make it simple:

a. Write which control you can’t address. It may be logging and monitoring, complex password etc.

b. Why can’t you do it? Some good reasons are that the legacy system that runs on the factory store churning out a thousand printed statements a minute, that cannot be patched due to patches no longer being produced. A bad reason is, Bob is new to the company and has no clue what does patching means.

c. Risk Analysis. This isn’t something natural many companies do for IT, but it has to be done. If the system cannot be patched what are the risks? Infection of malware? Internal exploit? Availability problem? Loss of power?

d. Document the control. This has to be done. You can’t just go and say, “OK, QSA, trust us, it’s in.” It needs to be detailed procedures on how the organisation will carry out these compensating controls.

e. Ensure it’s implemented and validate it with the QSA. In fact, we would suggest to bring in the QSA early in the process. Since they are the ones validating your controls, it makes sense to onboard them with the fact that you are doing compensating controls. What you may find acceptable to your risk may not be acceptable to the QSA. It’s not a matter of, “Hey, let me bear the risk this year!”. It’s a matter of “If this thing goes south, who else is going to be affected?” – QSA, banks, companies – because credit card information isn’t just the domain of the company handling it – it has upstream and downstream repercussions – from the payment brands, banks, acquirers TPA, service providers to the customers.

To be honest, compensating is a major pain in the butt. There is no way to describe it better. It’s actually worse that the actual controls. Plus its not a given each year – QSA may decide due to next year’s evolving risks your controls are no longer acceptable!

An example here: say you can’t patch your pre-historic system for a good reason. The vendor has since announced they no longer support that system and a new release is imminent in a year’s time and the notice is for all customers to bear down and wait for the release. At this moment it’s completely out of the customer’s hands.

The compensating controls could be

a) Having a documented notice from the vendor that security patching cannot be done

b) Hosting the system in an isolated VLAN that does not have any other CDE/non-cde systems

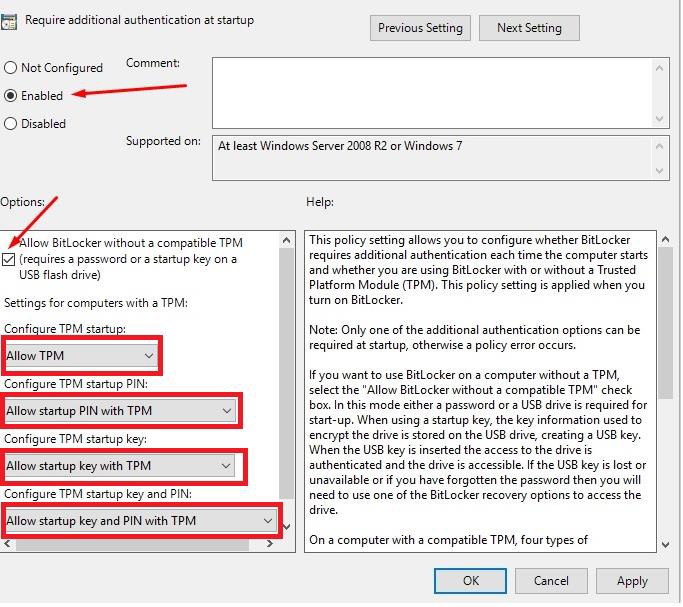

c) MFA needs for ALL access, not just administrative

d) Firewall rules must be specific to port/source/destination

e) Copy/paste, USB etc are disallowed in said system, and any attempts to do that is logged through DLP.

f) Antimalware must be installed and logs monitored specifically

g) Logging and monitoring needs to be reviewed daily specifically for this system and a report daily separately for incidents to this system

Taking a look at the above examples, these are controls that are considered above and beyond what is necessary for PCI requirements. In short, its a lot harder or more expensive to get compensating controls done than for the actual controls, no matter what you may think.

We once had a conversation with a company who were thinking of switching to us from previous consultants. When asked, we realised that many of their systems were not PCI compliant and they had put in compensating controls. Their compensating controls were: “Mitigation plan is in place to replace these systems in a year’s time.”

That’s not a compensating control. That’s something you plan to do in 365 days time. In the meantime, what are you proposing to lower the risk? That’s your compensating controls.

Don’t use the compensating controls as a get out of jail free card. Any consultant/QSA worth their salt would know how difficult it is to get these controls done and more importantly passed for PCI-DSS. In other words, instead of looking at it as a convenient shortcut or workaround, it should be viewed as the last resort.

Drop us a note at pcidss@pkfmalaysia.com for any queries you may have on PCI-DSS and we will respond to that immediately!